Vector Spaces Demystified: From Abstract Theory to Geometric Intuition


Vector spaces sound intimidating, but they're everywhere—from the 3D graphics in your favorite game to the AI recommending your next song. Let's build an intuition that will make you see vector spaces not as abstract nonsense, but as a powerful framework for understanding the world.

The Big Picture: What's a Vector Space, Really?

Forget the formal definition for a second. A vector space is simply a playground where you can add things and scale them, and these operations behave nicely.

Think of vectors as arrows in space. You can:

- • Add two arrows (place the tail of one at the tip of the other)

- • Scale an arrow (make it longer or shorter)

- • And these operations follow predictable rules

Building Intuition: From Familiar to Abstract

Level 1: Arrows in 2D

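Start with something visual. Draw two arrows on paper:

- • v = [3, 2] (go 3 right, 2 up)

- • w = [1, 4] (go 1 right, 4 up)

Placing w at the tip of v lands you at v + w = [4, 6]; doubling v gives 2v = [6, 4]. You can literally see these operations. This is ℝ², your first vector space.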
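To make the arithmetic concrete, here is a minimal Python sketch, assuming vectors are stored as plain tuples; `add` and `scale` are illustrative names, not a library API.

```python
def add(v, w):
    """Tip-to-tail addition: add matching components."""
    return tuple(a + b for a, b in zip(v, w))


def scale(c, v):
    """Stretch, shrink, or (for negative c) flip an arrow by the factor c."""
    return tuple(c * a for a in v)


v = (3, 2)  # go 3 right, 2 up
w = (1, 4)  # go 1 right, 4 up

print(add(v, w))     # (4, 6)
print(scale(2, v))   # (6, 4)
print(scale(-1, w))  # (-1, -4)
```

Because both functions work component by component via `zip`, the same code handles ℝ³ or any ℝⁿ unchanged.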

Level 2: Beyond Arrows

Here's where it gets interesting. Vectors don't have to be arrows. Polynomials form a vector space:

- • p(x) = 2x² + 3x + 1

- • q(x) = x² - x + 4

- • (p + q)(x) = 3x² + 2x + 5

- • 2p(x) = 4x² + 6x + 2

So do real-valued functions, added and scaled point by point:

- • f(x) = sin(x)

- • g(x) = cos(x)

- • (f + g)(x) = sin(x) + cos(x)

- • 3f(x) = 3sin(x)
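One way to see that polynomials and functions behave like arrows is to implement the same two operations for them. In this sketch (an illustrative representation, not a standard library API), a polynomial is a list of coefficients, lowest degree first, and functions are combined point by point:

```python
import math


# Polynomials: [c0, c1, c2] means c0 + c1*x + c2*x^2.
def poly_add(p, q):
    # Pad the shorter list with zeros so different degrees line up.
    n = max(len(p), len(q))
    p = p + [0] * (n - len(p))
    q = q + [0] * (n - len(q))
    return [a + b for a, b in zip(p, q)]


def poly_scale(c, p):
    return [c * a for a in p]


p = [1, 3, 2]    # 2x^2 + 3x + 1
q = [4, -1, 1]   # x^2 - x + 4
print(poly_add(p, q))    # [5, 2, 3]  i.e. 3x^2 + 2x + 5
print(poly_scale(2, p))  # [2, 6, 4]  i.e. 4x^2 + 6x + 2


# Functions: the "vectors" are the functions; operations act pointwise.
def fn_add(f, g):
    return lambda x: f(x) + g(x)


def fn_scale(c, f):
    return lambda x: c * f(x)


h = fn_add(math.sin, math.cos)  # (f + g)(x) = sin(x) + cos(x)
k = fn_scale(3, math.sin)       # 3f(x) = 3 sin(x)
print(h(0.0))  # 1.0
```

The coefficient-list view also previews why polynomials of degree ≤ 2 behave like ℝ³: each one is just three numbers.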

The Eight Axioms: Why Rules Matter

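A vector space V is a set of vectors together with two operations, vector addition and multiplication by scalars (real numbers, for now), that must satisfy a short list of rules. Think of them as the "laws of physics" for that space:

Addition Axioms

- Closure: v + w is in V (you can't "escape" the space)

- Associativity: (u + v) + w = u + (v + w) (grouping doesn't matter)

- Identity: There's a zero vector 0 where v + 0 = v

- Inverse: For every v, there's a -v where v + (-v) = 0

- Commutativity: v + w = w + v (order doesn't matter)

Scalar Multiplication Axioms

- Closure: cv is in V

- Distributivity: c(v + w) = cv + cw and (c + d)v = cv + dv

- Associativity: c(dv) = (cd)v

- Identity: 1v = v (scaling by 1 changes nothing)

The two closure rules just say the operations never leave V. The remaining rules, counting the two distributive laws separately, are the eight axioms.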

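These aren't arbitrary: drop any one and familiar behavior breaks. For example, keep ordinary addition on ℝ² but define cv = 0 for every scalar c and vector v. Closure, distributivity, and associativity all still hold, but 1v = v fails, and scaling no longer does anything useful.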
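A quick way to build intuition is to spot-check the axioms on sample vectors. The helper `check_axioms` below is hypothetical, and an empty result only means no counterexample turned up among the samples; it is not a proof. Run on the broken scaling cv = 0, it reports exactly one failure, the scalar identity 1v = v:

```python
from itertools import product


def check_axioms(add, scale, zero, neg, vectors, scalars):
    """Spot-check the eight vector-space axioms on sample inputs.

    Returns the set of axiom names that failed for at least one sample.
    An empty set is evidence, not proof: only the given samples are tried.
    """
    failed = set()
    for u, v, w in product(vectors, repeat=3):
        if add(add(u, v), w) != add(u, add(v, w)):
            failed.add("additive associativity")
        if add(u, v) != add(v, u):
            failed.add("commutativity")
        if add(u, zero) != u:
            failed.add("additive identity")
        if add(u, neg(u)) != zero:
            failed.add("additive inverse")
        for c, d in product(scalars, repeat=2):
            if scale(c, add(u, v)) != add(scale(c, u), scale(c, v)):
                failed.add("distributivity over vector addition")
            if scale(c + d, u) != add(scale(c, u), scale(d, u)):
                failed.add("distributivity over scalar addition")
            if scale(c, scale(d, u)) != scale(c * d, u):
                failed.add("scalar associativity")
        if scale(1, u) != u:
            failed.add("scalar identity")
    return failed


def add(v, w):
    return (v[0] + w[0], v[1] + w[1])


def neg(v):
    return (-v[0], -v[1])


def usual_scale(c, v):
    return (c * v[0], c * v[1])


def broken_scale(c, v):
    return (0, 0)  # "scale" every vector to the zero vector


samples = [(0, 0), (1, 2), (-3, 5)]
scalars = [0, 1, 2, -1]  # integers keep every comparison exact

print(check_axioms(add, usual_scale, (0, 0), neg, samples, scalars))   # set()
print(check_axioms(add, broken_scale, (0, 0), neg, samples, scalars))  # {'scalar identity'}
```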

Core Concepts That Actually Matter

Linear Independence: The "Can't Fake It" Property

Vectors are linearly independent if none can be built from the others.

Dependent (redundant):

- • [1, 0]

- • [0, 1]

- • [1, 1] ← This is just [1, 0] + [0, 1]!

Independent (nothing to remove):

- • [1, 0]

- • [0, 1]
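Independence can be tested mechanically: a list of vectors is independent exactly when its rank (the number of pivots left after Gaussian elimination) equals the number of vectors. This sketch uses exact fractions to avoid round-off; `rank` and `is_independent` are illustrative helpers, and in practice a library routine such as NumPy's `numpy.linalg.matrix_rank` does the same job in floating point:

```python
from fractions import Fraction


def rank(vectors):
    """Rank of a list of equal-length vectors via Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0  # number of pivot rows found so far
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        # Find a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def is_independent(vectors):
    # No vector is redundant exactly when every vector contributes a pivot.
    return rank(vectors) == len(vectors)


print(is_independent([[1, 0], [0, 1]]))          # True
print(is_independent([[1, 0], [0, 1], [1, 1]]))  # False
print(is_independent([[1, 2], [2, 4]]))          # False
```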

Span: The "Reachable Universe"

The span of a set of vectors is everything you can build from them by scaling and adding (all their linear combinations):

- • span{[1,0], [0,1]} = all of ℝ² (the entire plane)

- • span{[1,2]} = a line through the origin

- • span{[1,2], [2,4]} = still just a line! ([2,4] = 2·[1,2], so they're parallel)
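For vectors in ℝ², a span can only be the origin, a line, or the whole plane, and a 2×2 determinant tells you which. The sketch below (hypothetical helper names, ℝ² only) also tests membership, using the fact that a vector lies in a span exactly when adding it does not make the span any bigger:

```python
def span_dimension_2d(vectors):
    """Dimension of the span of vectors in R^2: 0, 1, or 2."""
    nonzero = [v for v in vectors if any(v)]
    if not nonzero:
        return 0  # only the zero vector: the span is just the origin
    for i, (a, b) in enumerate(nonzero):
        for c, d in nonzero[i + 1:]:
            if a * d - b * c != 0:
                return 2  # a non-parallel pair reaches the whole plane
    return 1  # every vector is parallel to the others: a line


def in_span(target, vectors):
    """target is in span(vectors) iff adding it doesn't enlarge the span."""
    return span_dimension_2d(vectors + [target]) == span_dimension_2d(vectors)


print(span_dimension_2d([(1, 0), (0, 1)]))  # 2 (the entire plane)
print(span_dimension_2d([(1, 2)]))          # 1 (a line)
print(span_dimension_2d([(1, 2), (2, 4)]))  # 1 (still just a line)
print(in_span((3, 6), [(1, 2)]))            # True
print(in_span((1, 0), [(1, 2), (2, 4)]))    # False
```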

Key Insight: Adding a vector expands your span only if it is independent of the vectors you already have, that is, only if it isn't already in their span.

Basis: The "Coordinate System"

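A basis is a set of independent vectors that spans the entire space. It's a minimal set that describes everything: every vector can be written as a combination of the basis vectors in exactly one way. Standard basis for ℝ³:

- • e₁ = [1, 0, 0]

- • e₂ = [0, 1, 0]

- • e₃ = [0, 0, 1]

Any vector [a, b, c] equals a·e₁ + b·e₂ + c·e₃. Likewise, {1, x, x²} is a basis for the polynomials of degree ≤ 2.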
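Because basis vectors are independent and spanning, every vector has exactly one set of coordinates with respect to a given basis. Here is a sketch that finds those coordinates in ℝ² using Cramer's rule; the function name is illustrative, and the tilted basis {[1, 1], [1, -1]} is just an example chosen for this snippet:

```python
from fractions import Fraction


def coordinates(basis, v):
    """Coordinates (a, b) with a*b1 + b*b2 == v, for a basis (b1, b2) of R^2.

    Solves the 2x2 system by Cramer's rule with exact fractions.
    """
    (p, q), (r, s) = basis
    det = p * s - r * q  # determinant of the matrix whose columns are b1, b2
    if det == 0:
        raise ValueError("b1 and b2 are parallel, so they are not a basis of R^2")
    x, y = v
    a = Fraction(x * s - r * y, det)
    b = Fraction(p * y - x * q, det)
    return a, b


# Standard basis: the coordinates are just the components.
print(*coordinates(((1, 0), (0, 1)), (3, 2)))   # 3 2
# A tilted basis gives different, but still unique, coordinates.
print(*coordinates(((1, 1), (1, -1)), (3, 1)))  # 2 1
```

The `ValueError` branch is the code's version of "not a basis": parallel vectors fail to span the plane, so no unique answer exists.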

Dimension: The "Degrees of Freedom"

Dimension = number of vectors in any basis (every basis of a given space has the same number of vectors).

- • Line: 1D (one direction of freedom)

- • Plane: 2D (two independent directions)

- • Space: 3D (three independent directions)

- • Polynomials of degree ≤ n: (n+1)D

Subspaces: Worlds Within Worlds

A subspace is a subset that's also a vector space under the same addition and scaling. It must:

- Contain the zero vector

- Be closed under addition

- Be closed under scalar multiplication

Examples in ℝ³:

- • Any line through the origin (1D subspace)

- • Any plane through the origin (2D subspace)

- • {0} alone (0D subspace)

- • All of ℝ³ (3D subspace)

Non-examples:

- • A line not through the origin (no zero vector!)

- • A disk (scaling can escape it)

- • The union of two different lines through the origin (adding a vector from each line can land on neither)
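The three subspace conditions can also be spot-checked by machine. To keep it short, this sketch works in ℝ² rather than ℝ³ and uses hypothetical names; a False result comes from a genuine counterexample, while True only means none was found among the samples:

```python
from itertools import product


def looks_like_subspace(contains, samples, scalars=(-2, -1, 0, 0.5, 3)):
    """Spot-check the subspace conditions for a subset of R^2.

    `contains` tests membership; `samples` are points known to be in the set.
    """
    if not contains((0, 0)):
        return False  # no zero vector
    for (a, b), (c, d) in product(samples, repeat=2):
        if not contains((a + c, b + d)):
            return False  # addition escaped the set
    for k, (a, b) in product(scalars, samples):
        if not contains((k * a, k * b)):
            return False  # scaling escaped the set
    return True


def line(p):          # y = 2x, a line through the origin
    return p[1] == 2 * p[0]


def shifted_line(p):  # y = 2x + 1, misses the origin
    return p[1] == 2 * p[0] + 1


def disk(p):          # x^2 + y^2 <= 1
    return p[0] ** 2 + p[1] ** 2 <= 1


def two_axes(p):      # union of the x-axis and the y-axis
    return p[0] == 0 or p[1] == 0


print(looks_like_subspace(line, [(1, 2), (-3, -6), (2, 4)]))  # True
print(looks_like_subspace(shifted_line, [(0, 1), (1, 3)]))    # False
print(looks_like_subspace(disk, [(0.6, 0.0), (0.0, 0.6)]))    # False
print(looks_like_subspace(two_axes, [(1, 0), (0, 1)]))        # False
```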

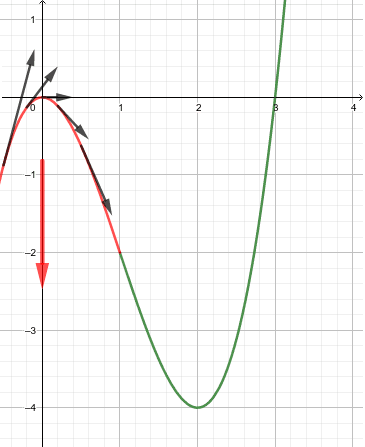

Linear Transformations: The Morphisms of Vector Spaces

A linear transformation T preserves vector space structure:

- • T(v + w) = T(v) + T(w)

- • T(cv) = cT(v)
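The two linearity conditions are easy to test numerically. This sketch (hypothetical helper `looks_linear`, ℝ² only, with an arbitrarily chosen floating-point tolerance of 1e-9) shows that a rotation passes while a translation and a squaring map fail; as with any spot-check, passing is evidence rather than proof:

```python
import math


def looks_linear(T, vectors, scalars, tol=1e-9):
    """Check T(v + w) == T(v) + T(w) and T(cv) == cT(v) on sample inputs."""
    def close(p, q):
        return all(abs(a - b) <= tol for a, b in zip(p, q))

    for v in vectors:
        Tv = T(v)
        for w in vectors:
            Tw = T(w)
            if not close(T((v[0] + w[0], v[1] + w[1])), (Tv[0] + Tw[0], Tv[1] + Tw[1])):
                return False
        for c in scalars:
            if not close(T((c * v[0], c * v[1])), (c * Tv[0], c * Tv[1])):
                return False
    return True


def rotate(v, theta=0.3):
    x, y = v
    return (x * math.cos(theta) - y * math.sin(theta),
            x * math.sin(theta) + y * math.cos(theta))


def translate(v):
    return (v[0] + 1, v[1])  # shift right by 1: even the origin moves


def square_x(v):
    return (v[0] ** 2, v[1])


samples = [(1, 2), (-3, 0.5), (0, 0)]
scalars = [2, -1, 0.5]
print(looks_linear(rotate, samples, scalars))     # True
print(looks_linear(translate, samples, scalars))  # False
print(looks_linear(square_x, samples, scalars))   # False
```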

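Geometric Transformations in ℝ²:

- • Rotation by $\theta$: $T([x, y]) = [x\cos\theta - y\sin\theta,\; x\sin\theta + y\cos\theta]$

- • Projection onto the x-axis: $T([x, y]) = [x, 0]$

- • Scaling (stretch by 2 horizontally and 3 vertically): $T([x, y]) = [2x, 3y]$

The Matrix Connection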

Every linear transformation between finite-dimensional spaces has a matrix representation once you fix bases: $T(v) = Av$. The columns of $A$ are $T(e_1), \dots, T(e_n)$, where $e_1, \dots, e_n$ are the basis vectors.
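The claim that the columns of A are the images of the basis vectors can be checked directly. This sketch (illustrative names, standard basis of ℝ², matrices stored as nested lists of rows) builds A from T(e₁) and T(e₂) for a rotation, the projection onto the x-axis, and the 2-by-3 stretch, then confirms that multiplying by A reproduces T:

```python
import math


def matrix_of(T):
    """2x2 matrix of a linear map on R^2: column j is T(e_j)."""
    c1, c2 = T((1, 0)), T((0, 1))
    return [[c1[0], c2[0]],
            [c1[1], c2[1]]]


def apply(A, v):
    """Matrix-vector product Av."""
    return (A[0][0] * v[0] + A[0][1] * v[1],
            A[1][0] * v[0] + A[1][1] * v[1])


def rotation(theta):
    def T(v):
        x, y = v
        return (x * math.cos(theta) - y * math.sin(theta),
                x * math.sin(theta) + y * math.cos(theta))
    return T


def projection(v):  # onto the x-axis
    return (v[0], 0)


def stretch(v):     # doubles x, triples y
    return (2 * v[0], 3 * v[1])


print(matrix_of(projection))  # [[1, 0], [0, 0]]
print(matrix_of(stretch))     # [[2, 0], [0, 3]]

R = rotation(math.pi / 6)
A = matrix_of(R)
v = (3, -2)
print(all(abs(a - b) < 1e-12 for a, b in zip(apply(A, v), R(v))))  # True
```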

Real-World Applications

Computer Graphics

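Rotations, scalings, and orthographic projections in games and CGI are linear transformations on ℝ³. Translations and perspective projections are not linear on ℝ³, so graphics pipelines handle them with 4×4 matrices acting on homogeneous coordinates.

Machine Learning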

- • Data points are vectors in high-dimensional spaces

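- • Each feature is one coordinate, i.e. one direction of the standard basis

- • PCA finds a new orthonormal basis whose first vectors capture as much of the data's variance as possible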
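For two-dimensional data the whole PCA computation fits in a few lines: the new basis vectors are the eigenvectors of the covariance matrix, ordered by variance. This pure-Python sketch is illustrative only (the helper name and sample points are made up, and it handles 2-D data only); real projects would use a library such as scikit-learn's `PCA` or NumPy's eigendecomposition routines:

```python
import math


def pca_2d(points):
    """Principal axes of 2-D data as an orthonormal basis (u1, u2),
    largest-variance direction first."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Covariance matrix [[a, b], [b, d]] (population version, divide by n).
    a = sum((x - mx) ** 2 for x, _ in points) / n
    d = sum((y - my) ** 2 for _, y in points) / n
    b = sum((x - mx) * (y - my) for x, y in points) / n
    # Largest eigenvalue of a symmetric 2x2 matrix, in closed form.
    lam = (a + d) / 2 + math.sqrt(((a - d) / 2) ** 2 + b ** 2)
    if b != 0:
        vx, vy = lam - d, b  # solves (A - lam*I)v = 0
    elif a >= d:
        vx, vy = 1.0, 0.0    # already diagonal: x-axis has more variance
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    u1 = (vx / norm, vy / norm)
    u2 = (-u1[1], u1[0])  # perpendicular unit vector completes the basis
    return u1, u2


points = [(1, 1.1), (2, 1.9), (3, 3.2), (4, 3.8), (5, 5.0)]  # roughly along y = x
u1, u2 = pca_2d(points)
print(u1)  # approximately (0.716, 0.698)
```

The first basis vector points almost along the diagonal, which is where these points spread out the most; the second captures the small scatter across it.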

Quantum Mechanics

- • Quantum states are vectors in Hilbert spaces

- • Observables are linear operators

- • Measurement collapses the state onto an eigenvector of the observable

Signal Processing

- • Signals are vectors in function spaces

- • Fourier transform changes basis from time to frequency

- • Compression removes components with small coefficients
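The change-of-basis view of the Fourier transform can be made concrete with the discrete Fourier transform (DFT): its coefficients are the signal's coordinates in a basis of complex exponentials. This naive O(n²) sketch uses hypothetical helper names; production code would use an FFT such as `numpy.fft.fft`. Keeping only the largest coefficients is a toy version of the compression idea:

```python
import cmath
import math


def dft(signal):
    """Coordinates of the signal in the Fourier basis (naive O(n^2) DFT)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(coeffs):
    """Change back from frequency coordinates to time samples."""
    n = len(coeffs)
    return [sum(coeffs[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def compress(signal, keep):
    """Zero all but the `keep` largest-magnitude coefficients, then rebuild."""
    coeffs = dft(signal)
    ranked = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    kept = set(ranked[:keep])
    return [z.real for z in idft([c if k in kept else 0 for k, c in enumerate(coeffs)])]


# A slow wave plus a small, faster ripple.
n = 8
signal = [math.cos(2 * math.pi * t / n) + 0.05 * math.cos(2 * math.pi * 3 * t / n)
          for t in range(n)]

roundtrip = [z.real for z in idft(dft(signal))]
print(max(abs(a - b) for a, b in zip(signal, roundtrip)) < 1e-9)  # True

approx = compress(signal, keep=2)  # keeps only the slow wave's two coefficients
print(max(abs(a - b) for a, b in zip(signal, approx)))  # about 0.05, the dropped ripple
```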

Common Misconceptions Cleared Up

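"Vectors must be arrows"

No! Vectors are abstract objects. Arrows are just one representation.

"All vector spaces are ℝⁿ"

No! Function spaces, polynomial spaces, and matrix spaces are vector spaces too. A finite-dimensional real vector space is isomorphic to ℝⁿ (it behaves exactly like ℝⁿ once you choose a basis), but spaces such as all continuous functions are infinite-dimensional.

"Dimension = number of coordinates"

Careful! Dimension = size of a basis. You can use more coordinates than necessary (a redundant representation): a plane through the origin in ℝ³ is written with three coordinates but is 2-dimensional.

"Subspaces can be anywhere"

No! They must pass through the origin. A shifted subspace is called an affine subspace.

Problem-Solving Strategy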

When facing a vector space problem:

- Identify the space: What are your vectors?

- Check the axioms: Is it really a vector space?

- Find a basis: What's the simplest description?

- Compute dimension: How many degrees of freedom?

- Look for subspaces: Any interesting subsets?

- Consider transformations: How do vectors relate?

The Beautiful Unity

Here's the profound insight: Whether you're working with:

- • Arrows in 3D space

- • Polynomials

- • Quantum states

- • Solutions to differential equations

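- • Color spaces in computer graphics

the same definitions and theorems apply to all of them: prove a result once for abstract vector spaces, and it holds in every one of these settings.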

Practice Problems to Test Understanding

- Is the set of n × n matrices with trace 0 a vector space?

- Find a basis for the solutions to x + y + z = 0 in ℝ³

- Prove that if are linearly independent, so are

Your Journey Forward

Vector spaces are the foundation for:

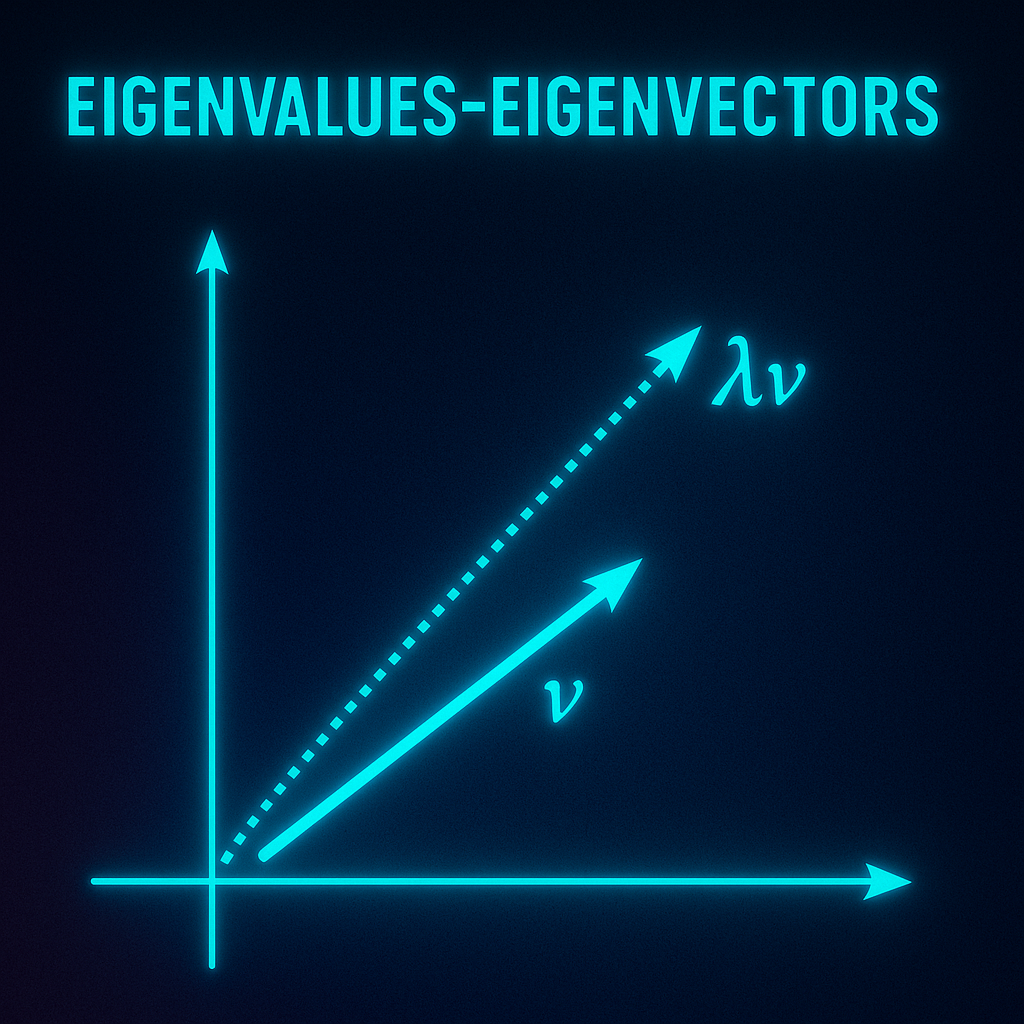

- • Linear Algebra: Eigenvalues, diagonalization, Jordan forms

- • Functional Analysis: Infinite-dimensional spaces, Hilbert spaces

- • Differential Geometry: Tangent spaces, tensor fields

- • Abstract Algebra: Modules over rings

Master Vector Spaces with Didaxa

Struggling with abstract concepts? Didaxa's AI tutor adapts to your learning style, providing:

- • Interactive visualizations for geometric intuition

- • Step-by-step proof guidance

- • Practice problems tailored to your level

- • Connections to your field of interest

Written by

Didaxa Team

The Didaxa Team is dedicated to transforming education through AI-powered personalized learning experiences.
