Eigenvalues and Eigenvectors: The Hidden Patterns Behind Everything

You know that feeling when you finally understand something you've been struggling with for ages? That moment when the pieces click together and you think, "Oh! That's what everyone was talking about!" That's exactly what happened to me with eigenvalues and eigenvectors.

They sounded scary. But once you get them, you realize they're everywhere: in Google's search algorithm, in quantum mechanics explaining why atoms don't collapse, and in many machine learning methods that deal with high-dimensional data.

What's the Big Idea?

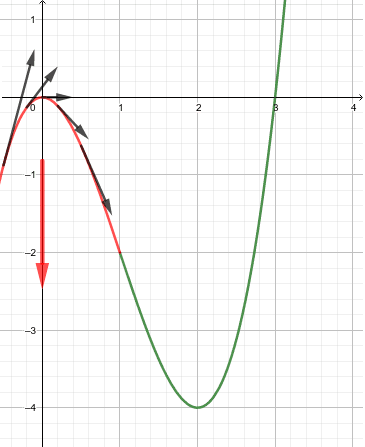

Here's the core insight: Most transformations are messy—they stretch, rotate, shear, and scramble everything. But there are special directions where the transformation just stretches or shrinks, without rotation.

Imagine you're standing in a room with a magical mirror. When most people look into it, their reflection is distorted—taller, wider, tilted. But there are special people who, when they look into this mirror, see themselves exactly the same shape, just bigger or smaller. Those special people are the eigenvectors, and how much bigger or smaller they appear is the eigenvalue.

The Mathematical Definition

For a square matrix $A$ (which represents a linear transformation), an eigenvector $\mathbf{v}$ and its corresponding eigenvalue $\lambda$ satisfy:

$$A\mathbf{v} = \lambda\mathbf{v}$$

Let's unpack this equation:

- $A$ is our transformation matrix

- $\mathbf{v}$ is a non-zero vector (the eigenvector)

- $\lambda$ is a scalar (the eigenvalue)

- The equation says: "Applying transformation $A$ to $\mathbf{v}$ is the same as just scaling $\mathbf{v}$ by factor $\lambda$"

A Visual Example

Consider a 2×2 transformation matrix $A$ and try a couple of vectors.

A regular vector: the direction changed! The original was at 45°, and the result is at ~27°.

Now a special one: magic! The direction stayed the same, just scaled by 3. So this vector is an eigenvector with eigenvalue $\lambda = 3$.
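The numbers quoted above can be reproduced with a small sketch. The matrix and the two test vectors below are assumptions for illustration: they are one choice consistent with the 45° to ~27° change and the scale factor of 3, not necessarily the ones this example originally used.

```python
import math

# Assumed matrix: one choice that reproduces the angles and scale factor above.
A = [[3.0, 1.0],
     [0.0, 2.0]]

def apply(matrix, v):
    """Multiply a 2x2 matrix by a 2-vector."""
    return [matrix[0][0] * v[0] + matrix[0][1] * v[1],
            matrix[1][0] * v[0] + matrix[1][1] * v[1]]

def angle_deg(v):
    """Angle of a 2-vector measured from the x-axis, in degrees."""
    return math.degrees(math.atan2(v[1], v[0]))

regular = [1.0, 1.0]   # assumed "regular" vector at 45 degrees
special = [1.0, 0.0]   # assumed eigenvector

moved = apply(A, regular)    # direction changes
scaled = apply(A, special)   # direction is kept, length is multiplied by 3

print(angle_deg(regular), angle_deg(moved))
print(angle_deg(special), angle_deg(scaled))
```

The first pair of angles differs, while the second pair is identical, which is exactly the property that singles out an eigenvector.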

How to Find Eigenvalues and Eigenvectors

Step 1: Rearrange the Equation

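Starting from $A\mathbf{v} = \lambda\mathbf{v}$, we get:

$$(A - \lambda I)\mathbf{v} = \mathbf{0}$$

where $I$ is the identity matrix.

Step 2: The Characteristic Equation

For this equation to have a non-trivial solution (i.e., $\mathbf{v} \neq \mathbf{0}$), the matrix $A - \lambda I$ must be singular:

$$\det(A - \lambda I) = 0$$

This is the characteristic equation: a polynomial in $\lambda$ whose roots are the eigenvalues!

Step 3: Find the Eigenvectors

Once you have an eigenvalue $\lambda$, substitute it back into $(A - \lambda I)\mathbf{v} = \mathbf{0}$ and solve for $\mathbf{v}$.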
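For a 2×2 matrix the three steps fit in a few lines, because the characteristic polynomial is just a quadratic. The sketch below is a minimal illustration, not a general-purpose solver: the function name `eigen_2x2` is made up for this example, and it only handles real eigenvalues.

```python
import math

def eigen_2x2(a, b, c, d):
    """Eigenpairs of [[a, b], [c, d]], assuming the eigenvalues are real.

    Returns a list of (eigenvalue, eigenvector) tuples.
    """
    # Diagonal matrix: the standard basis vectors are already eigenvectors.
    if b == 0 and c == 0:
        return [(a, (1.0, 0.0)), (d, (0.0, 1.0))]

    # Step 2: det(A - lam*I) = lam^2 - trace*lam + det = 0
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues; this sketch covers the real case only")
    root = math.sqrt(disc)

    pairs = []
    for lam in ((trace + root) / 2, (trace - root) / 2):
        # Step 3: solve (A - lam*I) v = 0 from a single row.
        if b != 0:
            v = (b, lam - a)      # first row: (a - lam)*x + b*y = 0
        else:
            v = (lam - d, c)      # second row: c*x + (d - lam)*y = 0
        pairs.append((lam, v))
    return pairs

# Usage with an assumed example matrix [[4, 1], [2, 3]]
for lam, v in eigen_2x2(4, 1, 2, 3):
    print(lam, v)
```

For larger matrices the polynomial route becomes impractical, and numerical libraries use iterative algorithms instead.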

Example: Full Calculation

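Let's find eigenvalues and eigenvectors for a 2×2 matrix $A$.

Solving the characteristic equation $\det(A - \lambda I) = 0$ gives two eigenvalues, $\lambda_1$ and $\lambda_2$.

Find the eigenvector for $\lambda_1$: the first row of $(A - \lambda_1 I)\mathbf{v} = \mathbf{0}$ gives a relation between the two components of $\mathbf{v}$.

Eigenvector: any non-zero vector satisfying that relation (or any scalar multiple of it)

Find the eigenvector for $\lambda_2$: the first row of $(A - \lambda_2 I)\mathbf{v} = \mathbf{0}$ gives the corresponding relation.

Eigenvector: any non-zero vector satisfying that relation (or any scalar multiple of it)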
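Here is the same calculation carried out with concrete numbers. The matrix is an assumed example chosen because it factors cleanly; it is not necessarily the one this section originally used.

$$
A = \begin{pmatrix} 4 & 1 \\ 2 & 3 \end{pmatrix}, \qquad
\det(A - \lambda I) = (4 - \lambda)(3 - \lambda) - (1)(2) = \lambda^2 - 7\lambda + 10 = (\lambda - 5)(\lambda - 2)
$$

So $\lambda_1 = 5$ and $\lambda_2 = 2$.

$$
\lambda_1 = 5: \quad A - 5I = \begin{pmatrix} -1 & 1 \\ 2 & -2 \end{pmatrix}, \quad -x + y = 0 \;\Rightarrow\; y = x, \quad \mathbf{v}_1 = \begin{pmatrix} 1 \\ 1 \end{pmatrix}
$$

$$
\lambda_2 = 2: \quad A - 2I = \begin{pmatrix} 2 & 1 \\ 2 & 1 \end{pmatrix}, \quad 2x + y = 0 \;\Rightarrow\; y = -2x, \quad \mathbf{v}_2 = \begin{pmatrix} 1 \\ -2 \end{pmatrix}
$$

A quick check: $A\mathbf{v}_1 = (5, 5)^T = 5\mathbf{v}_1$ and $A\mathbf{v}_2 = (2, -4)^T = 2\mathbf{v}_2$.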

Key Properties

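1. Eigenvectors Define Directions

If $\mathbf{v}$ is an eigenvector, so is any scalar multiple $c\mathbf{v}$ (where $c \neq 0$). Eigenvectors define a direction, not a specific vector.

2. Linear Independence

Eigenvectors corresponding to different eigenvalues are linearly independent. This means that when an $n \times n$ matrix has $n$ distinct eigenvalues, you can build a basis from eigenvectors!

3. Trace and Determinant Shortcuts

For an $n \times n$ matrix $A$ with eigenvalues $\lambda_1, \dots, \lambda_n$ (counted with multiplicity):

- Trace (sum of eigenvalues): $\operatorname{tr}(A) = \lambda_1 + \lambda_2 + \cdots + \lambda_n$

- Determinant (product of eigenvalues): $\det(A) = \lambda_1 \lambda_2 \cdots \lambda_n$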
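The trace and determinant shortcuts are easy to check numerically. The sketch below uses NumPy and an assumed 2×2 example matrix; any square matrix would do.

```python
import numpy as np

# Assumed example matrix; its eigenvalues are 5 and 2.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigenvalues = np.linalg.eigvals(A)

# Trace equals the sum of the eigenvalues, determinant equals their product.
trace_matches = np.isclose(np.trace(A), eigenvalues.sum())
det_matches = np.isclose(np.linalg.det(A), eigenvalues.prod())

print(trace_matches, det_matches)
```

These identities make a handy sanity check after computing eigenvalues by hand.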

Diagonalization: The Power Move

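If an $n \times n$ matrix $A$ has $n$ linearly independent eigenvectors, we can diagonalize it:

$$A = PDP^{-1}$$

where:

- $P$ is a matrix whose columns are the eigenvectors

- $D$ is a diagonal matrix with the eigenvalues on the diagonal, in the same order as the columns of $P$

And for a diagonal matrix, powers are computed entry by entry:

$$D^k = \operatorname{diag}(\lambda_1^k, \dots, \lambda_n^k)$$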
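One payoff of diagonalization is cheap matrix powers, since $A^k = PD^kP^{-1}$ whenever $A = PDP^{-1}$. A minimal NumPy sketch, using an assumed 2×2 example matrix:

```python
import numpy as np

# Assumed example matrix with two distinct eigenvalues, so it is diagonalizable.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigenvalues, P = np.linalg.eig(A)   # columns of P are eigenvectors
D = np.diag(eigenvalues)
P_inv = np.linalg.inv(P)

# Rebuild A from its eigendecomposition.
reconstructed = P @ D @ P_inv

# Compute A^5 by raising only the diagonal entries to the 5th power.
k = 5
A_power = P @ np.diag(eigenvalues ** k) @ P_inv
direct = np.linalg.matrix_power(A, k)

print(np.allclose(reconstructed, A), np.allclose(A_power, direct))
```

The comparison against `np.linalg.matrix_power` is only there to confirm that both routes agree.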

Real-World Applications

1. Google PageRank

The web is a giant matrix whose entries record which pages link to which. The importance scores form the eigenvector corresponding to the largest eigenvalue. Google computes the dominant eigenvector of a matrix with billions of rows!

2. Principal Component Analysis (PCA)

When you have data with 1000 features, PCA uses eigenvectors of the data's covariance matrix to find the directions of maximum variance. The eigenvalues tell you how much variance each direction captures. Keep only the top few eigenvectors to reduce dimensions while preserving most of the information.

3. Quantum Mechanics

The time-independent Schrödinger equation is literally an eigenvalue problem:

$$\hat{H}\psi = E\psi$$

- $\hat{H}$ is the Hamiltonian operator

- $\psi$ are the eigenfunctions (quantum states)

- $E$ are the eigenvalues (energy levels)

4. Vibrations and Stability

- Structural engineering: Eigenvalues determine resonance frequencies of bridges

- Control theory: Eigenvalues tell if a linear system is stable (all eigenvalues have negative real parts) or unstable (at least one has a positive real part)

- Population dynamics: Eigenvalues predict long-term growth rates
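To make the PCA application above more concrete, here is a minimal sketch of PCA via an eigendecomposition of the covariance matrix. The synthetic data and the helper name `pca` are assumptions for illustration; production code would more likely call a library routine.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): 200 samples, 5 features,
# generated from 2 underlying directions plus a little noise.
latent = rng.normal(size=(200, 2)) * np.array([3.0, 1.0])
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.1 * rng.normal(size=(200, 5))

def pca(X, k):
    """Project X onto the top-k eigenvectors of its covariance matrix."""
    centered = X - X.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    # eigh is meant for symmetric matrices and returns eigenvalues in ascending order.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    order = np.argsort(eigenvalues)[::-1]          # largest variance first
    eigenvalues = eigenvalues[order]
    eigenvectors = eigenvectors[:, order]
    explained = eigenvalues / eigenvalues.sum()    # share of variance per direction
    return centered @ eigenvectors[:, :k], eigenvalues, explained

projected, eigenvalues, explained = pca(X, k=2)
print(projected.shape)
print(explained.round(3))
```

Each eigenvalue is the variance of the data along its eigenvector, which is why the top few directions can stand in for the full feature set.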

Special Types of Matrices

Symmetric Matrices

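If $A$ is real and $A = A^T$, then:

- All eigenvalues are real

- Eigenvectors for different eigenvalues are orthogonal, and a full orthonormal set of eigenvectors can always be chosen

- Always diagonalizable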
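These three properties can be checked on a small case. The sketch below uses an assumed 3×3 symmetric matrix and NumPy's `eigh`, which is designed for symmetric (Hermitian) input.

```python
import numpy as np

# Assumed symmetric example matrix.
S = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 4.0]])

# The general-purpose solver finds no imaginary parts.
all_real = bool(np.isreal(np.linalg.eigvals(S)).all())

# eigh returns eigenvalues in ascending order and orthonormal eigenvectors as columns of Q.
eigenvalues, Q = np.linalg.eigh(S)

orthonormal = np.allclose(Q.T @ Q, np.eye(3))                # eigenvectors are orthogonal
rebuilt = np.allclose(Q @ np.diag(eigenvalues) @ Q.T, S)     # S = Q D Q^T

print(all_real, orthonormal, rebuilt)
```

Because the eigenvector matrix is orthogonal, its inverse is just its transpose, so no explicit matrix inversion is needed.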

Positive Definite Matrices

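A symmetric matrix whose eigenvalues are all positive ($\lambda_i > 0$):

- Covariance matrices are positive semidefinite ($\lambda_i \geq 0$), and positive definite when no feature is an exact linear combination of the others

- Crucial in optimization (a positive definite Hessian guarantees a strict local minimum, and a unique global minimum for a quadratic objective)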

Markov Matrices

Rows/columns sum to 1:

- Always have $\lambda = 1$ as an eigenvalue

- Corresponding eigenvector is the steady-state distribution
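The steady state can be found by repeatedly applying the matrix, the same power-iteration idea behind computing a dominant eigenvector in PageRank. The 3-state chain below is hypothetical, and the sketch assumes the column-stochastic convention (each column sums to 1, so the distribution is a column vector).

```python
import numpy as np

# Hypothetical 3-state Markov matrix; every column sums to 1.
P = np.array([[0.90, 0.20, 0.10],
              [0.05, 0.70, 0.30],
              [0.05, 0.10, 0.60]])

def steady_state(P, tol=1e-12, max_iter=10_000):
    """Power iteration: apply P until the distribution stops changing."""
    n = P.shape[0]
    pi = np.full(n, 1.0 / n)          # start from the uniform distribution
    for _ in range(max_iter):
        nxt = P @ pi
        if np.abs(nxt - pi).sum() < tol:
            return nxt
        pi = nxt
    return pi

pi = steady_state(P)
print(pi.round(4))
print(np.allclose(P @ pi, pi))        # pi is an eigenvector with eigenvalue 1
```

Power iteration only needs matrix-vector products, which is why it scales to very large, sparse matrices.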

Common Misconceptions

"All matrices can be diagonalized"

False! Example: the matrix $\begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}$, with 1's on the diagonal and just above it, has only one eigenvalue ($\lambda = 1$) with one linearly independent eigenvector.

"Eigenvalues are unique"

True for values, false for vectors! The eigenvalues of a matrix are uniquely determined (counting multiplicity), but eigenvectors are not: any non-zero scalar multiple works.

"Eigenvectors must be normalized"

False! Any non-zero scalar multiple is equally valid.

The Geometric Intuition

Here's the deepest insight: Every diagonalizable linear transformation is just stretching along eigenvector directions.

In the eigenvector basis:

- The transformation becomes diagonal

- Each eigenvector direction gets scaled by its eigenvalue

- No rotation, no shearing, just pure stretching
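Not every matrix has enough eigenvector directions for this picture to apply. As a counterpoint, the sketch below takes the 2×2 shear matrix with 1's on the diagonal and just above it (the kind of matrix described under "All matrices can be diagonalized") and counts its independent eigenvectors. It relies on the fact that the number of independent eigenvectors for $\lambda$ equals $n$ minus the rank of $A - \lambda I$.

```python
import numpy as np

# Shear matrix: a standard example of a non-diagonalizable matrix.
J = np.array([[1.0, 1.0],
              [0.0, 1.0]])

eigenvalues = np.linalg.eigvals(J)             # the eigenvalue 1, repeated twice

# Dimension of the null space of (J - 1*I) = n - rank.
rank = np.linalg.matrix_rank(J - np.eye(2))
independent_eigenvectors = J.shape[0] - rank

print(eigenvalues, independent_eigenvectors)
```

With only one eigenvector direction for a 2×2 matrix, there is no eigenvector basis, so the shear cannot be written as pure stretching.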

Master Linear Algebra with Didaxa

Eigenvalues finally making sense? Or still feeling like there's a gap between the formulas and the intuition?

With Didaxa's AI tutor, you get:

- Interactive visualizations showing how transformations act on eigenvectors

- Step-by-step guidance through calculations

- Custom practice problems that build from basic 2×2 matrices to real applications

- Connections to your field, whether you're in engineering, physics, or computer science

Written by

Didaxa Team

The Didaxa Team is dedicated to transforming education through AI-powered personalized learning experiences.
